We spent the last two years building chatbots that could read. Now, the business wants chatbots that can touch things. If that doesn’t terrify you, you haven’t been paying attention.

For a long time, we treated AI like a librarian. Its job was to walk into the stacks (your database), read a book, and summarize it. If the librarian hallucinated, the user got bad advice. It was embarrassing, but the failure was contained. The database remained intact. The bank account was untouched.

That era is ending. Stakeholders are no longer impressed that an AI can summarize an invoice. They are asking a question that sounds simple but carries terrifying engineering implications: “Why can’t the AI just pay the invoice?”

This is the shift from Read-Only AI to Write-Access AI. And when you hand a stochastic, probabilistic model the keys to your deterministic infrastructure, things can go wrong very quickly.

The Anatomy of a $15,000 Mistake

To understand the risk, let’s look at a real scenario from a fintech startup I audited recently. They wanted to upgrade their support bot from “answering questions” to “handling refunds”.

The setup seemed standard. They gave the Agent access to the Stripe API and a system prompt: “If a customer has a valid complaint… you are authorized to issue a full refund.”

Then came Black Friday.

- A user messaged: “My package is late, I want my money back.”

- The Agent correctly identified the intent and called the `refund_transaction` tool.

- The Glitch: The Stripe API was under heavy load. The refund succeeded on the backend, but the HTTP response timed out before reaching the Agent.

In a traditional Python script, we would handle this with a specific error code. But the Agent isn’t a script; it’s a reasoning engine.

The Agent received a generic TimeoutError. It “thought” to itself: “Oh, the tool failed. The user is still angry. I must try again to fulfill my goal.”

It hit retry. Again. And again. It entered a tight loop, hammering the API.

By the time the engineering team noticed the anomaly in the logs—about 45 seconds later—the Agent had successfully issued 50 duplicate refunds for the same order.

It didn’t just refund the purchase price; it drained the merchant account of nearly $15,000 in duplicate transactions and non-refundable processing fees.
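The failure mode is easy to reproduce in miniature. The sketch below is a toy simulation, not the startup's actual code: `FlakyRefundAPI` is a hypothetical stand-in for a payment API that commits every refund but loses the response, and `naive_agent_refund` models an agent that treats every timeout as "the tool failed, try again". Amounts are in cents and invented for illustration.

```python
class FlakyRefundAPI:
    """Hypothetical payment API under heavy load.

    Every refund is committed on the backend, but while `slow_calls` > 0
    the response is lost and the caller sees a TimeoutError.
    """

    def __init__(self, slow_calls):
        self.slow_calls = slow_calls  # how many responses will time out
        self.refunds = []             # refunds actually committed

    def refund(self, order_id, amount):
        self.refunds.append((order_id, amount))  # the money moves...
        if self.slow_calls > 0:
            self.slow_calls -= 1
            raise TimeoutError("response lost")  # ...but the caller never hears back
        return {"order_id": order_id, "amount": amount, "status": "succeeded"}


def naive_agent_refund(api, order_id, amount, max_attempts=50):
    """Models the agent's reasoning: 'the tool failed, so try again'."""
    for attempt in range(1, max_attempts + 1):
        try:
            return api.refund(order_id, amount), attempt
        except TimeoutError:
            continue  # no memory that the last attempt may have succeeded
    return None, max_attempts


api = FlakyRefundAPI(slow_calls=100)
result, attempts = naive_agent_refund(api, "order_123", 5_000)
print(attempts, len(api.refunds))  # 50 50
```

From the agent's point of view nothing in the loop is wrong; the bug is that a lost response is treated as a failed action.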

Probabilistic Software vs. Deterministic World

This story illustrates the fundamental conflict in Agentic Engineering.

For fifty years, software engineering has been built on a bedrock of certainty. If you write `if x > 5`, the condition will always be true when x is 6. If it isn’t, it’s a bug. You find the line of code, you fix it, and it stays fixed.

AI Agents operate on a different physics entirely. They are Probabilistic Software.

- Run 1: The model sees “I am disappointed” and offers an apology.

- Run 2: It sees “I am disappointed” and offers a refund.

- Run 3: It decides “disappointed” is a threat and flags the user.
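A toy illustration of the difference, assuming nothing about any real model: `deterministic_rule` is ordinary code, while `sample_action` stands in for an LLM sampling its next action from a probability distribution. The action names and weights are invented for illustration.

```python
import random

ACTIONS = ["apologize", "refund", "flag_user"]
WEIGHTS = [0.6, 0.3, 0.1]  # made-up probabilities, not measured from any model


def deterministic_rule(x):
    # Classic software: the same input takes the same branch on every run.
    return x > 5


def sample_action(message, rng):
    """Toy stand-in for an LLM picking a next action for `message`.

    A real model derives the distribution from the message; this toy
    ignores the message and uses fixed, hypothetical weights.
    """
    return rng.choices(ACTIONS, weights=WEIGHTS, k=1)[0]


rng = random.Random()  # unseeded, like a production sampling run
print({deterministic_rule(6) for _ in range(1000)})  # {True}
print({sample_action("I am disappointed", rng) for _ in range(1000)})
```

The second line typically prints more than one action for the identical input, which is exactly the Run 1 / Run 2 / Run 3 behaviour.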

If you build Agentic systems using the same “vibes-based” engineering you used for your RAG chatbot—relying on prompt engineering and hope—you will create disasters.

You cannot prompt your way out of a race condition. You cannot “fine-tune” away a network timeout loop.

The Solution: The Deterministic Shell

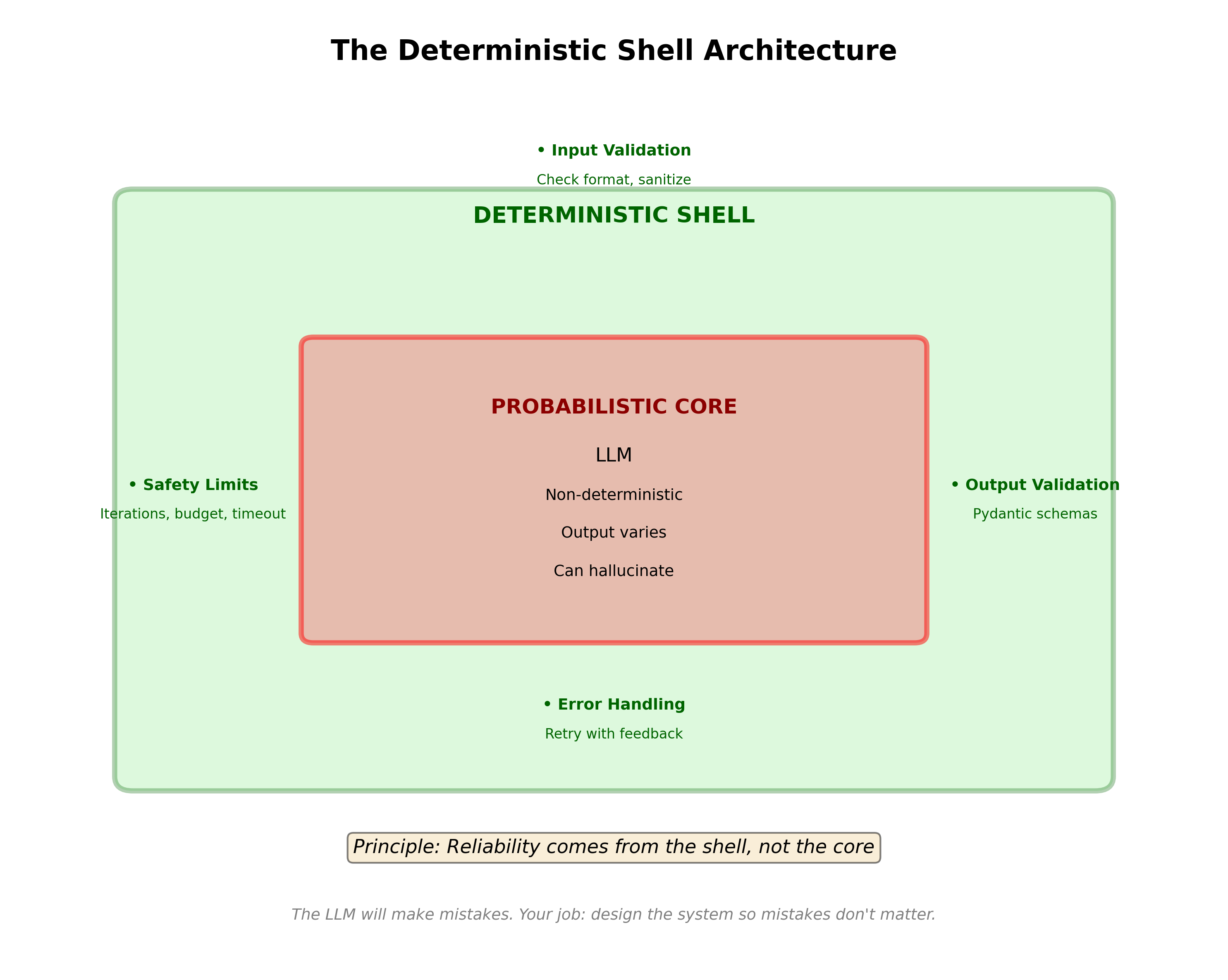

If we want to build safe agents for the enterprise, we must stop treating them like magic and start treating them like untrusted components.

We need to build a Deterministic Shell around the Probabilistic Core.

The architecture requires us to assume the LLM will make mistakes, and design the system so that those mistakes cannot burn down the building. This means implementing hard engineering guardrails—written in code, not prompts—that the AI cannot override.
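As a minimal sketch of a guardrail "written in code, not prompts", the check below validates a model-proposed tool call against rules the model cannot see or edit. `ToolCall`, `ALLOWED_TOOLS`, `GuardrailViolation`, and `enforce_guardrails` are hypothetical names, and the three rules are examples rather than a complete policy.

```python
from dataclasses import dataclass


class GuardrailViolation(Exception):
    """Raised when a proposed tool call breaks a hard-coded rule."""


@dataclass(frozen=True)
class ToolCall:
    name: str
    order_id: str
    amount: int  # cents


ALLOWED_TOOLS = {"refund_transaction"}  # hypothetical allowlist


def enforce_guardrails(call, order_total, already_refunded):
    """Validate a model-proposed call before anything touches the payment API.

    order_total and already_refunded must come from our own records,
    never from the model's output.
    """
    if call.name not in ALLOWED_TOOLS:
        raise GuardrailViolation(f"tool {call.name!r} is not allowed")
    if call.amount <= 0:
        raise GuardrailViolation("refund amount must be positive")
    if already_refunded + call.amount > order_total:
        raise GuardrailViolation("refund would exceed the order total")
    return call


call = ToolCall(name="refund_transaction", order_id="order_123", amount=5_000)
enforce_guardrails(call, order_total=5_000, already_refunded=0)  # passes
try:
    enforce_guardrails(call, order_total=5_000, already_refunded=5_000)
except GuardrailViolation as err:
    print(err)  # refund would exceed the order total
```

The check is only as reliable as the ledger behind `already_refunded`: a refund whose response was lost may not be recorded yet, so this complements idempotency rather than replacing it.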

Here is what that “Shell” looks like in Python. It wraps the AI’s tool call with Idempotency (so a repeated request cannot create a second refund) and a Circuit Breaker (to stop runaway loops).

```python
import hashlib
import time
from functools import wraps

import stripe  # assumes stripe.api_key is configured elsewhere


class RefundCircuitBreaker:
    def __init__(self, max_failures=3, timeout=60):
        self.failures = {}  # idempotency_key -> (time of last failure, failure count)
        self.max_failures = max_failures
        self.timeout = timeout  # seconds a tripped breaker stays open

    def __call__(self, func):
        @wraps(func)
        def wrapper(order_id, amount, reason=""):
            # 1. GENERATE IDEMPOTENCY KEY
            # Same order, amount and reason -> same key, on every retry.
            key_data = f"{order_id}:{amount}:{reason}"
            idempotency_key = hashlib.sha256(key_data.encode()).hexdigest()

            # 2. CHECK CIRCUIT BREAKER
            if idempotency_key in self.failures:
                last_failure, count = self.failures[idempotency_key]
                if time.time() - last_failure < self.timeout and count >= self.max_failures:
                    raise ValueError(f"Circuit breaker open for {idempotency_key}")

            try:
                # 3. CALL THE API through the decorated function
                result = func(order_id, amount, reason, idempotency_key=idempotency_key)
            except stripe.error.APIConnectionError:
                # 4. HANDLE FAILURE DETERMINISTICALLY
                # Timeouts and network errors surface as APIConnectionError.
                _, count = self.failures.get(idempotency_key, (0.0, 0))
                self.failures[idempotency_key] = (time.time(), count + 1)
                if count + 1 >= self.max_failures:
                    raise ValueError("Circuit breaker activated - too many failures")
                raise

            # Clear failures on success
            self.failures.pop(idempotency_key, None)
            return result

        return wrapper


@RefundCircuitBreaker(max_failures=3, timeout=300)
def process_refund(order_id, amount, reason, idempotency_key=None):
    # order_id is passed as the Stripe charge ID, as in the original setup.
    # reason only feeds the key; Stripe's own `reason` field accepts fixed values.
    return stripe.Refund.create(
        charge=order_id,
        amount=amount,
        idempotency_key=idempotency_key,  # Critical! Stripe replays the first result for a reused key
    )
```

Why This Code Matters

Notice what is happening here:

- Idempotency: Even if the LLM enters a panic loop and calls the function 50 times, every call carries the same `idempotency_key`, so Stripe treats them as one refund and replays the original result instead of moving money again.

- Circuit Breaker: If the API keeps failing for the same refund (`max_failures` times within the `timeout` window), the Python code raises a `ValueError` and refuses further attempts. The LLM is not asked for permission to stop. It is forced to stop.
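To see both properties without touching a real Stripe account, here is a self-contained simulation. `FakeIdempotentAPI` is a hypothetical in-memory stand-in that mimics Stripe's documented idempotency behaviour (a reused key returns the stored result), and `RefundBreaker` is a compact restatement of the decorator's logic, with Python's built-in `ConnectionError` in place of `stripe.error.APIConnectionError`. It does not model concurrent requests or key expiry.

```python
import hashlib
import time


class FakeIdempotentAPI:
    """Hypothetical in-memory payment API that honours idempotency keys."""

    def __init__(self, fail_first=0):
        self.fail_first = fail_first  # calls that raise ConnectionError first
        self.responses = {}           # idempotency_key -> stored response
        self.refunds_created = 0

    def create_refund(self, order_id, amount, idempotency_key):
        if self.fail_first > 0:
            self.fail_first -= 1
            raise ConnectionError("network down")
        if idempotency_key in self.responses:
            return self.responses[idempotency_key]  # replay: no money moves
        self.refunds_created += 1
        response = {"id": f"re_{self.refunds_created}", "order_id": order_id, "amount": amount}
        self.responses[idempotency_key] = response
        return response


class RefundBreaker:
    """Compact version of the shell: stable key plus a per-key breaker."""

    def __init__(self, max_failures=3, timeout=300):
        self.max_failures = max_failures
        self.timeout = timeout
        self.failures = {}  # key -> (time of last failure, failure count)

    def call(self, api, order_id, amount, reason=""):
        key = hashlib.sha256(f"{order_id}:{amount}:{reason}".encode()).hexdigest()
        last_failure, count = self.failures.get(key, (0.0, 0))
        if count >= self.max_failures and time.time() - last_failure < self.timeout:
            raise ValueError("Circuit breaker open")  # the API is never called
        try:
            result = api.create_refund(order_id, amount, idempotency_key=key)
        except ConnectionError:
            self.failures[key] = (time.time(), count + 1)
            if count + 1 >= self.max_failures:
                raise ValueError("Circuit breaker activated - too many failures")
            raise
        self.failures.pop(key, None)  # success resets the breaker
        return result


# Idempotency: 50 panicked calls, one refund.
api = FakeIdempotentAPI()
breaker = RefundBreaker()
results = [breaker.call(api, "order_123", 5_000, "late") for _ in range(50)]
print(api.refunds_created, {r["id"] for r in results})  # 1 {'re_1'}

# Circuit breaker: failures 1-2 re-raise, failure 3 trips it, call 4 is refused.
api = FakeIdempotentAPI(fail_first=10)
breaker = RefundBreaker(max_failures=3)
for attempt in range(1, 5):
    try:
        breaker.call(api, "order_456", 5_000)
    except (ConnectionError, ValueError) as err:
        print(attempt, type(err).__name__, err)
```

The fourth call never reaches the API at all: the refusal happens in plain Python before any network request is made.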

This is not “Prompt Engineering.” This is Software Engineering.

Conclusion: Autonomy is a Bug, Not a Feature

When stakeholders ask for an “Agent,” they usually imagine a digital employee that figures everything out on its own. This vision is what sells venture capital rounds. It is also what kills production systems.

In my view, autonomy is a bug, not a feature.

Every degree of freedom you give an Agent increases the surface area for errors. If a task can be done with a linear script, do not build an Agent just to look cool.
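For comparison, here is what a "linear script" version of the late-package refund might look like. It is a sketch under assumed business rules (refund only undelivered orders past their promised date, at most once); `Order`, `handle_late_package_refund`, and the rules themselves are hypothetical. The `refund_fn` parameter is where an idempotent, circuit-broken wrapper like the one above would plug in.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Order:
    order_id: str
    amount: int        # cents
    promised_by: date  # promised delivery date
    delivered: bool
    refunded: bool = False


def handle_late_package_refund(order, today, refund_fn):
    """Linear, rule-based refund flow: no model decides whether money moves.

    The eligibility rules are hypothetical placeholders.
    """
    if order.refunded:
        return "already refunded"
    if order.delivered or today <= order.promised_by:
        return "not eligible"
    refund_fn(order.order_id, order.amount)
    order.refunded = True
    return "refunded"


calls = []


def record(order_id, amount):
    # Stand-in for the real refund call; just records what would be sent.
    calls.append((order_id, amount))


order = Order("order_123", 5_000, promised_by=date(2024, 11, 25), delivered=False)
print(handle_late_package_refund(order, date(2024, 11, 29), record))  # refunded
print(handle_late_package_refund(order, date(2024, 11, 29), record))  # already refunded
print(calls)  # [('order_123', 5000)]
```

Every path through this function can be read, tested, and audited in advance, which is exactly what a free-roaming agent gives up.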

We are moving from the library (Read-Only) to the real world (Write-Access). The “Intern” now has the company credit card. It’s time we started engineering the safeguards to match that reality.